CS Education tools that emphasize students' process over product

With the current wave of concern around ChatGPT in education, including recent work showing that MOSS can be fooled by code generated by Large Language Models (LLMs) (Biderman & Raff, 2022), it is long past time for CS Education tools to emphasize students' process over product.

Some tools already give a qualitative view of the programming process, such as Codio’s own Code Playback, which shows keystroke-level changes, and ZyBooks’ Coding Trail feature, which summarizes student code runs. But no teacher has time to manually review every student submission, especially when teaching at scale. Instead, we need to bring the promise of the behavioral metrics being explored by researchers (e.g., Watwin, NPSM, RED, Error Quotient) seamlessly into real-world tools.

Introducing Codio’s new Behavior Insights:


- Quickly sort students so you only review submissions that need it

- See raw metrics compared to threshold values, selected based on thousands of assignments

- Read the data narrative below each tile for help interpreting it

- Click to see student pastes contextualized in their process

- “Ignore” insights that you find unconcerning

Ready to try it for yourself? It’s now live in Codio!


Turn it on in your course under Grading > Basic Settings and click Save Changes.

Detecting Plagiarism in Computing Exams

Currently, the indicators and corresponding dashboard are configured to detect plagiarism. On the student progress screen, you can filter and sort students by their Behavior indicator. There are three levels of the indicator: low, medium, and high.


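Clicking on a behavior indicator opens the Behavior Insights dashboard, which consists of up to five tiles: time spent, coding vs debugging time, history of external pastes, insertions vs deletions, and rate of edits.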

The dashboard shows only the tiles whose metrics fall above or below their respective thresholds, so clicking a low indicator will show fewer tiles than clicking a high indicator.

Chen, West, and Zilles (2018) found that, on average, 5.5% of students cheat on asynchronous exams, and no more than 10% on any given exam (see their Figure 4, reproduced below).

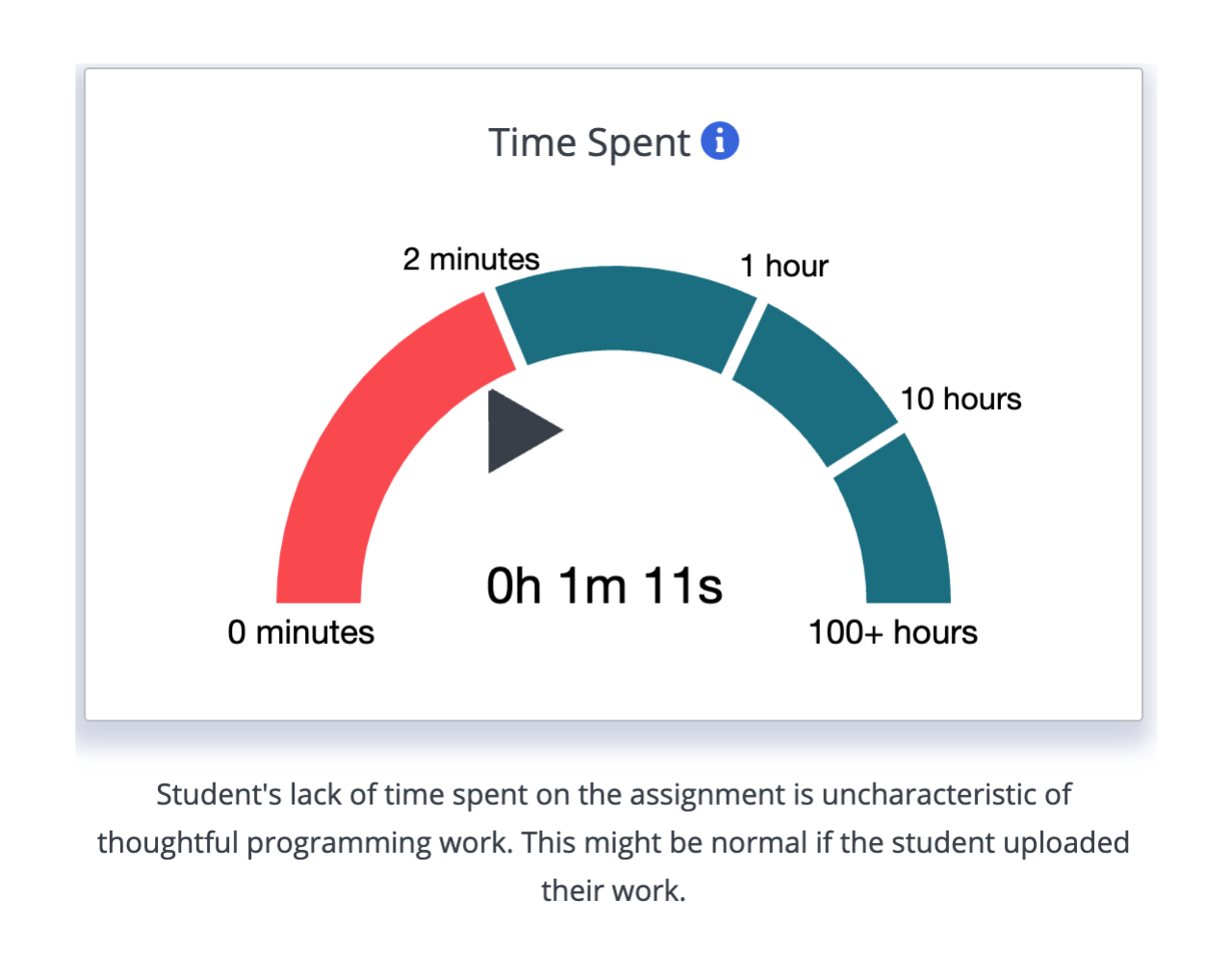

Time Spent

The first tile was inspired by existing Codio teachers, who told us they often sort the student progress dashboard by time spent. The time spent tile displays the time the student spent on the assignment in its center, mapped onto a log scale.

Based on thousands of student submissions, we determined a generalized threshold: submissions completed in under 2 minutes had a high likelihood of being plagiarized (represented by the red region).
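As a rough illustration of the time spent tile, the sketch below maps a duration onto a log-scaled axis and applies the 2-minute threshold. The axis bounds (`SCALE_MIN_S`, `SCALE_MAX_S`) and the function names are hypothetical; the post does not say what range Codio's scale actually covers.

```python
import math

# Hypothetical bounds for the log-scaled axis (not Codio's real values).
SCALE_MIN_S = 10.0          # 10 seconds
SCALE_MAX_S = 10 * 3600.0   # 10 hours
TIME_THRESHOLD_S = 120.0    # the 2-minute threshold described above


def log_scale_position(seconds: float) -> float:
    """Map a duration to a 0..1 position on a log-scaled axis, clamped at both ends."""
    clamped = min(max(seconds, SCALE_MIN_S), SCALE_MAX_S)
    span = math.log10(SCALE_MAX_S) - math.log10(SCALE_MIN_S)
    return (math.log10(clamped) - math.log10(SCALE_MIN_S)) / span


def time_spent_flagged(seconds: float) -> bool:
    """Flag submissions completed in under 2 minutes."""
    return seconds < TIME_THRESHOLD_S


for secs in (90, 600, 7200):
    print(secs, round(log_scale_position(secs), 2), time_spent_flagged(secs))
```

A log scale keeps a 90-second submission visually distinct from a 10-minute one while still fitting multi-hour sessions on the same axis.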

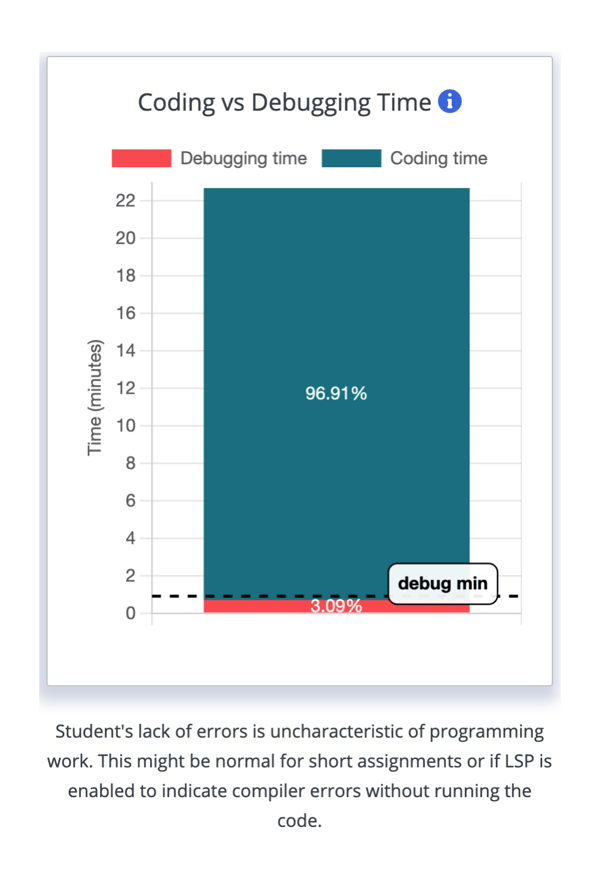

Coding vs Debugging Time

The idea of error versus error-free states has been explored in previous work, such as the development of the Error Quotient (Jadud, 2006) and Watwin scores (Watson, Li, & Godwin, 2013). We assume that students who have been presented with an error are working on resolving it, a process often referred to as “debugging”. All other time spent working, before a run has been attempted or after an error-free run, is considered “coding” time.

In the context of plagiarism detection, it would be odd for a student never to encounter errors or spend time resolving them. Based on thousands of student submissions, we determined a generalized threshold: submissions with less than 4% of their time in an error state (i.e., “debugging”) had a high likelihood of being plagiarized (represented by the dotted line).
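The coding/debugging split described above can be sketched as follows, assuming a hypothetical log of run events (each with a timestamp and whether the run produced an error) plus the session's start and end times. Time after an erroring run, up to the next run, counts as debugging; everything else, including time before the first run, counts as coding. The `RunEvent` type and function names are illustrative, not Codio's API.

```python
from dataclasses import dataclass
from typing import List, Tuple

DEBUG_THRESHOLD = 0.04  # flag if under 4% of time is spent in an error state


@dataclass
class RunEvent:
    timestamp: float  # seconds since the session started
    had_error: bool   # True if this run produced an error


def coding_vs_debugging(runs: List[RunEvent], start: float, end: float) -> Tuple[float, float]:
    """Return (coding_seconds, debugging_seconds) for one session.

    Each interval is attributed to the state set by the most recent run:
    debugging after an error, coding otherwise (including before the first run).
    """
    coding = debugging = 0.0
    in_error = False
    last_t = start
    for run in sorted(runs, key=lambda r: r.timestamp):
        if in_error:
            debugging += run.timestamp - last_t
        else:
            coding += run.timestamp - last_t
        in_error = run.had_error
        last_t = run.timestamp
    # Time after the final run keeps the final run's state.
    if in_error:
        debugging += end - last_t
    else:
        coding += end - last_t
    return coding, debugging


def debugging_flagged(runs: List[RunEvent], start: float, end: float) -> bool:
    """Flag sessions that spent less than 4% of their time debugging."""
    coding, debugging = coding_vs_debugging(runs, start, end)
    total = coding + debugging
    return total > 0 and debugging / total < DEBUG_THRESHOLD


session = [RunEvent(100, True), RunEvent(160, False), RunEvent(300, True), RunEvent(330, False)]
print(coding_vs_debugging(session, 0, 400))  # -> (310.0, 90.0)
print(debugging_flagged(session, 0, 400))    # -> False
```

In this example 90 of 400 seconds (22.5%) are spent debugging, well above the 4% threshold.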

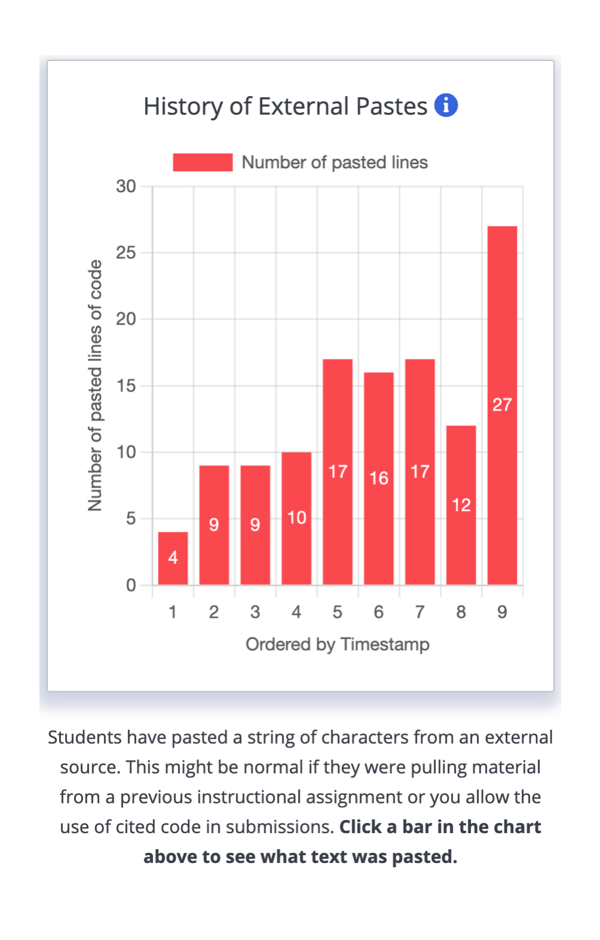

History of External Pastes

Detecting pastes is a common practice for identifying plagiarism (e.g., HackerRank’s implementation). However, pasting is also common during legitimate development, particularly when refactoring or pulling template code from the instructional content. For this reason, we check whether each detected paste already appears in the assignment’s files (or in those files' previous states); pastes that do not are treated as external. Ordering these paste events by timestamp gives a history of external pastes.
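A minimal sketch of this check, assuming we have each paste event (timestamp, file, text) and timestamped snapshots of every assignment file. Only snapshots taken before the paste are searched, since afterwards the file trivially contains the pasted text. Exact substring matching is a simplifying assumption (a real check might normalize whitespace or match fuzzily), and all names here are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical history format: file name -> list of (timestamp, full file content).
FileHistory = Dict[str, List[Tuple[float, str]]]


@dataclass
class PasteEvent:
    timestamp: float
    file: str
    text: str


def is_internal(paste: PasteEvent, history: FileHistory) -> bool:
    """True if the pasted text already existed in some assignment file
    (current or earlier state) before the paste happened.

    Whitespace-only pastes strip to "" and are therefore treated as internal.
    """
    needle = paste.text.strip()
    for snapshots in history.values():
        for ts, content in snapshots:
            if ts < paste.timestamp and needle in content:
                return True
    return False


def external_paste_history(pastes: List[PasteEvent], history: FileHistory) -> List[PasteEvent]:
    """External pastes only, ordered by timestamp."""
    external = [p for p in pastes if not is_internal(p, history)]
    return sorted(external, key=lambda p: p.timestamp)
```

Searching every file, not just the one pasted into, lets a student move code between files (e.g., during a refactor) without it being reported as external.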

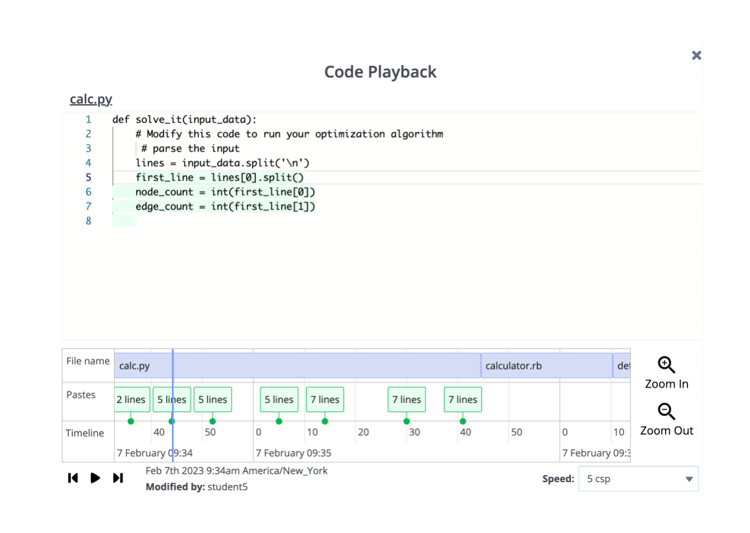

Multi-File Code Playback with Pastes

Many assignments contain multiple files, so we added multi-file support to code playback. As seen below, a file name section shows how students move between different files while programming.

Clicking on an indicated paste takes you to it on the timeline, where you can review the pasted text, highlighted in green, in the context of the code file in the top pane. You can navigate the timeline by pressing play, dragging the timeline, or using the zoom buttons to its right.
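The post does not describe how playback is implemented, but keystroke-level playback generally means replaying edits in order. As a purely hypothetical sketch, assume each edit records a timestamp, file name, character offset, number of characters deleted, and inserted text. Replaying every edit up to a chosen timeline position yields each file's contents at that moment, and the most recently edited file indicates where the student was working.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Edit:
    timestamp: float
    file: str
    offset: int    # character position where the edit starts
    deleted: int   # number of characters removed at offset
    inserted: str  # text inserted at offset, after the deletion


def state_at(edits: List[Edit], t: float) -> Tuple[Dict[str, str], Optional[str]]:
    """Replay edits with timestamp <= t; return every file's contents and the active file."""
    files: Dict[str, str] = {}
    active: Optional[str] = None
    for ed in sorted(edits, key=lambda x: x.timestamp):
        if ed.timestamp > t:
            break
        text = files.get(ed.file, "")
        files[ed.file] = text[:ed.offset] + ed.inserted + text[ed.offset + ed.deleted:]
        active = ed.file
    return files, active


edits = [
    Edit(1, "a.py", 0, 0, "print(1)"),
    Edit(2, "b.py", 0, 0, "x = 5"),
    Edit(3, "a.py", 6, 1, "2"),  # replace "1" with "2"
]
print(state_at(edits, 2))
print(state_at(edits, 3))
```

Replaying from the start is simple but linear in the number of edits; a player could cache periodic snapshots so that dragging the timeline stays responsive.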

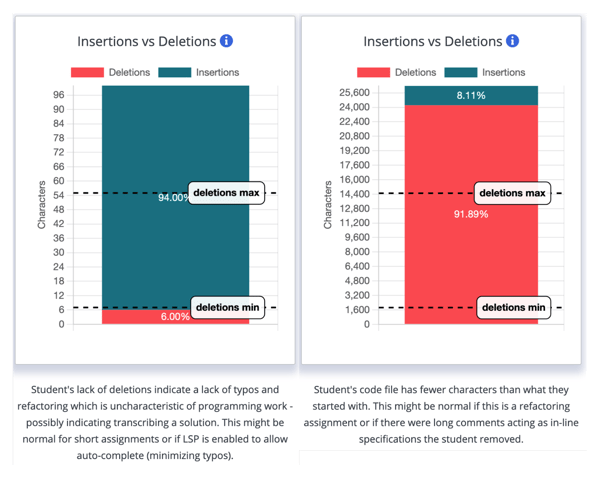

Insertions vs Deletions

It would be difficult to program without making any deletions, so in the context of plagiarism detection, a low proportion of deletions could indicate transcription. Additionally, Yee-King, Grierson, and d'Inverno (2017) found that creative programming assignments involved more deletions than traditional programming assignments, suggesting that more deletions could reflect the tinkering and exploration we might expect students to be doing while programming.

Based on thousands of student submissions, we determined a generalized threshold: submissions with under 7% deletions had a high likelihood of being plagiarized (represented by the minimum-deletions dotted line). We also flag submissions with over 55% deletions as an interesting case: any submission with more than 50% deletions means the student wound up with less code than they started with.
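The post does not spell out the denominator, so the sketch below assumes the percentage is deleted characters as a share of all edited characters (inserted plus deleted). Under that definition, a share above 50% means more characters were deleted than inserted, which is why such a student ends up with less code than they started with. Function names are hypothetical.

```python
from typing import Optional

MIN_DELETION_SHARE = 0.07  # under 7% deletions: possible transcription
MAX_DELETION_SHARE = 0.55  # over 55% deletions: flagged as an unusual case


def deletion_share(chars_inserted: int, chars_deleted: int) -> float:
    """Deleted characters as a fraction of all edited characters (an assumed definition)."""
    total = chars_inserted + chars_deleted
    return chars_deleted / total if total else 0.0


def deletion_flag(chars_inserted: int, chars_deleted: int) -> Optional[str]:
    """Return "low", "high", or None when the share is within the thresholds."""
    if chars_inserted + chars_deleted == 0:
        return None  # no edit data to judge (e.g., code was uploaded, not typed)
    share = deletion_share(chars_inserted, chars_deleted)
    if share < MIN_DELETION_SHARE:
        return "low"
    if share > MAX_DELETION_SHARE:
        return "high"
    return None


# Net change in code size: negative exactly when the deletion share exceeds 50%.
for ins, dels in ((1000, 50), (1000, 300), (400, 600)):
    print(ins, dels, round(deletion_share(ins, dels), 3), deletion_flag(ins, dels), ins - dels)
```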

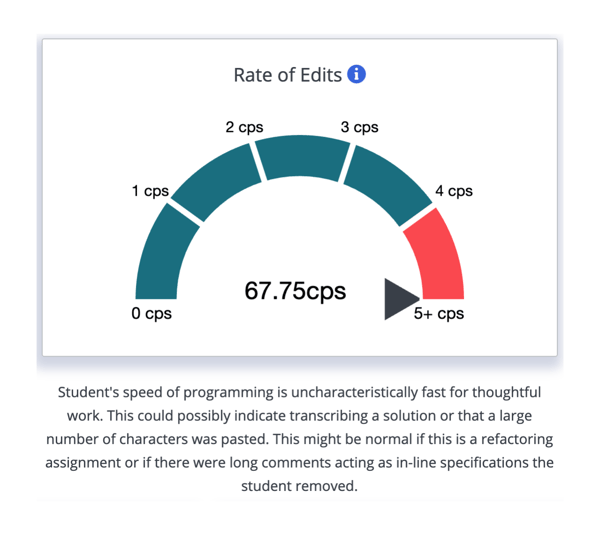

Rate of Edits (Characters per Second)

Programming speed has been investigated as a predictor of programming performance (Thomas, Karahasanovic, & Kennedy, 2005) and as a measure of student engagement (Edwards, Hart, & Warren, 2022). Although typing speeds vary between students, thoughtful work slows typing down. Based on thousands of student submissions, we determined a generalized threshold: submissions produced at a pace of more than 4 characters edited (inserted or deleted) per second had a high likelihood of being plagiarized (represented by the red region).
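The rate itself is simple arithmetic. The sketch below assumes the denominator is the student's working time on the assignment (the post does not say exactly which duration is used) and applies the 4 characters-per-second threshold. Function names are hypothetical.

```python
RATE_THRESHOLD_CPS = 4.0  # characters edited per second, per the threshold above


def edit_rate(chars_inserted: int, chars_deleted: int, seconds: float) -> float:
    """Characters edited (inserted + deleted) per second of working time."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return (chars_inserted + chars_deleted) / seconds


def rate_flagged(chars_inserted: int, chars_deleted: int, seconds: float) -> bool:
    """Flag submissions edited faster than the threshold."""
    return edit_rate(chars_inserted, chars_deleted, seconds) > RATE_THRESHOLD_CPS


# 2,000 inserted + 100 deleted characters over 5 minutes is 7 characters per second.
print(edit_rate(2000, 100, 300), rate_flagged(2000, 100, 300))  # -> 7.0 True
```

Counting deletions as well as insertions means a student who types and then erases code is credited for all of that activity, not just the code that survives.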

Who can see Behavior Insights?

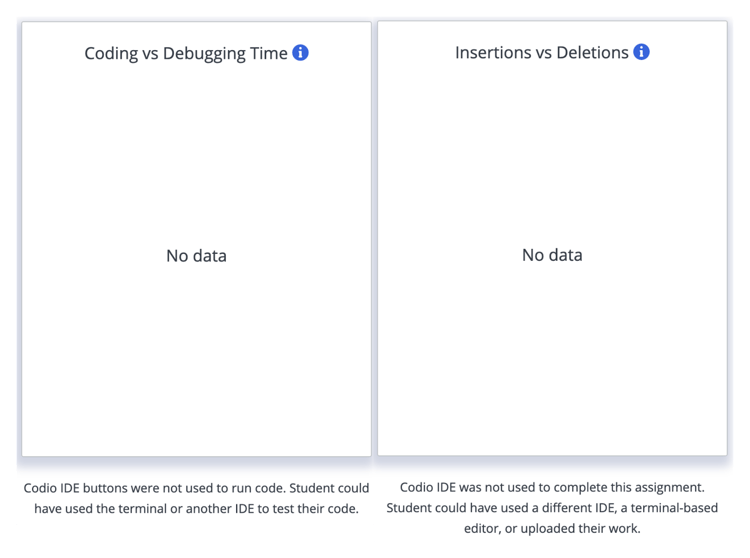

Those using Codio’s built-in IDE should see dashboards and tiles similar to the above once the feature is turned on at the course level, but if you have students working in a 3rd party IDE inside Codio or simply uploading their code that they developed locally, you will miss some of these rich insights and instead see “No data” as shown:

Currently, Behavior Insights is only for non-Pair Programming assignments.

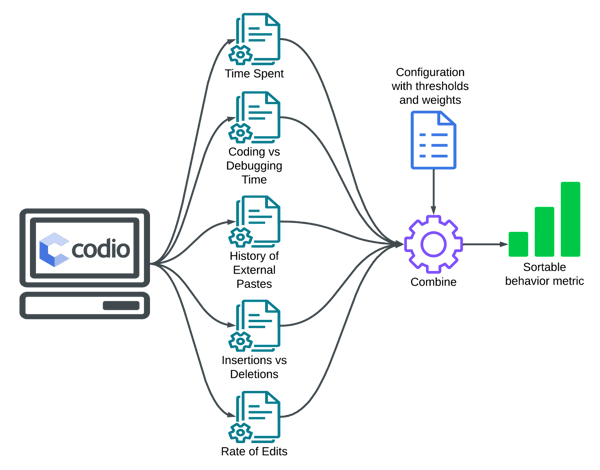

What if I don’t care about Plagiarism? Can I use this for other behaviors?

Yes! Read about configuring Behavior Insights to your needs here.

With different thresholds, the same intermediate measures could identify students who are exerting too much effort (e.g., struggling) rather than, as currently configured, too little effort (e.g., cheating).
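To illustrate how the same measures could serve different behaviors, the sketch below stores each numeric tile's threshold as a hypothetical min/max pair and shows a tile only when its metric falls outside that range, mirroring how the dashboard hides in-range tiles. The plagiarism values come from the thresholds described in this post; the struggling profile's numbers are invented placeholders, not recommendations, and the metric names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Threshold:
    low: Optional[float] = None   # flag if the metric is below this
    high: Optional[float] = None  # flag if the metric is above this

    def flags(self, value: float) -> bool:
        return (self.low is not None and value < self.low) or (
            self.high is not None and value > self.high
        )


# Thresholds described in this post for plagiarism.
PLAGIARISM = {
    "time_spent_s": Threshold(low=120),
    "debugging_share": Threshold(low=0.04),
    "deletion_share": Threshold(low=0.07, high=0.55),
    "edits_per_s": Threshold(high=4.0),
}

# Hypothetical profile for struggling students: placeholder values only.
STRUGGLING = {
    "time_spent_s": Threshold(high=3 * 3600),
    "debugging_share": Threshold(high=0.6),
}


def tiles_to_show(metrics: Dict[str, float], profile: Dict[str, Threshold]) -> List[str]:
    """Names of metrics outside their thresholds, i.e. the tiles to display."""
    return [name for name, th in profile.items() if name in metrics and th.flags(metrics[name])]


quick = {"time_spent_s": 95, "debugging_share": 0.0, "deletion_share": 0.03, "edits_per_s": 6.5}
slow = {"time_spent_s": 14400, "debugging_share": 0.7, "deletion_share": 0.2, "edits_per_s": 0.8}
print(tiles_to_show(quick, PLAGIARISM), tiles_to_show(quick, STRUGGLING))
print(tiles_to_show(slow, PLAGIARISM), tiles_to_show(slow, STRUGGLING))
```

Only the thresholds change between profiles; the metric computations stay the same.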

Going beyond effort, Codio is working on adding measures and opening up threshold configuration so that teachers can capture an unlimited number of behaviors, from struggling to creativity to frustration. This flexibility aligns with Codio’s general approach of putting as much customization as possible into the hands of the teacher, and we also know that teachers, who know the context of their courses, will be able to set more useful thresholds than generic, at-scale ones. Our past work (Chandarana & Deitrick, 2022; Chandarana & Deitrick, 2023) has shown that many promising behavior-based metrics from research lose power when scaled across contexts.

Try out Behavior Insights configured for Plagiarism today by turning it on in your course under Grading > Basic Settings.


***Patent Pending***

References

Biderman, S., & Raff, E. (2022, October). Fooling MOSS detection with pretrained language models. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management (pp. 2933-2943).

Chandarana, M., & Deitrick, E. (2022, June). Challenges of Scaling Programming-based Behavioral Metrics. In Proceedings of the Ninth ACM Conference on Learning @ Scale (pp. 304-308).

Chandarana, M., & Deitrick, E. (2023, March). Exploring Error State in "Time-on-Task" Calculations at Scale. In Proceedings of the 54th ACM Technical Symposium on Computer Science Education (p. 1336).

Edwards, J., Hart, K., & Warren, C. (2022, February). A practical model of student engagement while programming. In Proceedings of the 53rd ACM Technical Symposium on Computer Science Education V. 1 (pp. 558-564).

Jadud, M. C. (2006, September). Methods and tools for exploring novice compilation behaviour. In Proceedings of the second international workshop on Computing education research (pp. 73-84).

Thomas, R. C., Karahasanovic, A., & Kennedy, G. E. (2005, January). An investigation into keystroke latency metrics as an indicator of programming performance. In Proceedings of the 7th Australasian conference on Computing education-Volume 42 (pp. 127-134).

Watson, C., Li, F. W., & Godwin, J. L. (2013, July). Predicting performance in an introductory programming course by logging and analyzing student programming behavior. In 2013 IEEE 13th international conference on advanced learning technologies (pp. 319-323). IEEE.

Yee-King, M., Grierson, M., & d'Inverno, M. (2017). Evidencing the value of inquiry based, constructionist learning for student coders. International Journal of Engineering Pedagogy, 7(3), 109-129.