As computer science program enrollments soar, instructors are asked to scale their courses and move them online. Moving courses online has a number of benefits; however, it also raises concerns about academic integrity, particularly around computer science assessment tests.

A successful and effective computer science assessment test determines a student's knowledge of core computer science concepts, problem-solving abilities, and critical thinking skills. Such tests provide valuable insight into a student's approach to the subject matter.

Benefits of Computer-based Exams

Computer science assessment tests need to measure and track a student's ability to remember concepts, interpret information, apply those concepts, and write code in an appropriate environment.

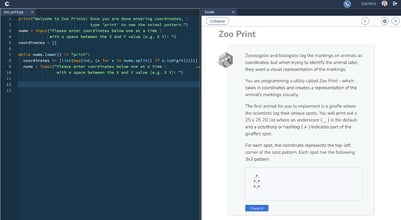

In computer science, one of the main benefits of a computer-based assessment test is allowing students to write code in an actual development environment. With access to a compiler and the ability to run and test their code, practice tests can become more effective.

For many topics we cover in computer science, the level of mastery we want students to reach is applying the concept to code. Therefore, it makes sense that their computer science assessment tests ask them to write code and exhibit their problem-solving skills.

Additionally, because CS tests are taken on a computer, students do not need to be physically present in a given place at a given time. Students can take the CS assessment test at their convenience during a pre-set window, which is particularly helpful for online courses where students may be spread across time zones. Instructors can also set a time limit within that window so that all students have the same amount of time.

Finally, moving computer science assessment tests to a computer enables auto-grading and instant feedback. Auto-graders mean less work for instructors and TAs after an online test, which frees them up to focus on helping students or on other tasks. Auto-graded test results also mean students can have timely feedback on their assessments.
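To make the auto-grading idea concrete, here is a minimal sketch of a test-case-based grader. It is not Codio's auto-grader: the `autograde` function, the `student_factorial` stand-in submission, and the test cases are all hypothetical names chosen for illustration. The sketch runs a submission against known input/output pairs and returns a score with feedback messages that could be shown to the student right away.

```python
from typing import Callable, Iterable


def student_factorial(n: int) -> int:
    """Stand-in for a student's submission (hypothetical)."""
    result = 1
    for i in range(2, n + 1):
        result *= i
    return result


def autograde(
    func: Callable[[int], int],
    cases: Iterable[tuple[int, int]],
) -> tuple[float, list[str]]:
    """Run func on each (input, expected) pair.

    Returns a score from 0 to 100 and one feedback line per failed case.
    """
    cases = list(cases)
    feedback: list[str] = []
    passed = 0
    for arg, expected in cases:
        try:
            actual = func(arg)
        except Exception as exc:  # a crash counts as a failed case
            feedback.append(f"input {arg}: raised {type(exc).__name__}")
            continue
        if actual == expected:
            passed += 1
        else:
            feedback.append(f"input {arg}: got {actual}, expected {expected}")
    score = 100.0 * passed / len(cases) if cases else 0.0
    return score, feedback


# Hypothetical test cases an instructor might write for a factorial exercise.
TEST_CASES = [(0, 1), (1, 1), (5, 120), (10, 3628800)]

if __name__ == "__main__":
    score, feedback = autograde(student_factorial, TEST_CASES)
    print(f"Score: {score}")
    for line in feedback:
        print(line)
```

Because the feedback list names the failing input, a student sees which case went wrong rather than only a total score.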

Deploying Unique Computer Science Assessment Tests

Despite all of these benefits, there is still hesitancy about migrating CS assessment tests online due to academic integrity concerns. One approach is to individualize student exams. Codio has a few exam proctoring settings that help without requiring any changes to the actual exam.

The first is the ability to shuffle Guides pages. By placing each question on its own Guides page, you can present each student with a randomized ordering of questions. This can be done by toggling on the Shuffle Question Order setting. Shuffling makes it harder for students to collude, because one student's first question is likely to differ from another's.
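The general idea behind per-student shuffling can be sketched in a few lines. This is an illustration only, not how Codio implements Shuffle Question Order: the `shuffled_pages` function, the page identifiers, and the seeding scheme are assumptions. Seeding the shuffle from the exam and student identifiers gives each student a stable ordering, so reloading the exam does not reshuffle it.

```python
import hashlib
import random


def shuffled_pages(page_ids: list[str], student_id: str, exam_id: str) -> list[str]:
    """Return a per-student ordering of pages that is stable across calls.

    The seed is derived from the exam and student identifiers (an assumed
    scheme), so the same student always sees the same order.
    """
    digest = hashlib.sha256(f"{exam_id}:{student_id}".encode("utf-8")).hexdigest()
    rng = random.Random(int(digest, 16))
    order = list(page_ids)  # copy so the caller's list is not modified
    rng.shuffle(order)
    return order


if __name__ == "__main__":
    pages = ["q1", "q2", "q3", "q4", "q5"]  # hypothetical page identifiers
    for student in ("student-001", "student-002"):
        print(student, shuffled_pages(pages, student, "midterm-1"))
```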

Additionally, instructors can toggle on Forward Only Navigation, which means students cannot revisit questions they have already moved past.

Another way to deploy unique computer science assessment tests is to randomize which assessments or sets of questions a student sees.

Randomized Assessments

Codio enables instructors to add Random Assessments. Simply specify the list of tag values, and Codio will pull an assessment test matching those criteria from the associated assessment library.
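As a rough illustration of tag-based selection, the sketch below filters a small in-memory library by tag values and picks one match at random. It is not Codio's API: the `Assessment` class, the `pick_random` function, the tag keys, and the sample library are all hypothetical.

```python
import random
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Assessment:
    name: str
    tags: dict[str, str] = field(default_factory=dict)


def pick_random(
    library: list[Assessment],
    required_tags: dict[str, str],
    rng: Optional[random.Random] = None,
) -> Assessment:
    """Pick one assessment whose tags match every required tag value."""
    rng = rng or random.Random()
    matches = [
        a for a in library
        if all(a.tags.get(key) == value for key, value in required_tags.items())
    ]
    if not matches:
        raise LookupError(f"no assessment matches {required_tags}")
    return rng.choice(matches)


# Hypothetical library entries; the tag keys are illustrative only.
LIBRARY = [
    Assessment("List loop types", {"language": "Java", "category": "Loops", "blooms": "Remember"}),
    Assessment("Choose a loop", {"language": "Java", "category": "Loops", "blooms": "Understand"}),
    Assessment("Iterative factorial", {"language": "Java", "category": "Loops", "blooms": "Apply"}),
    Assessment("Sum an array", {"language": "Java", "category": "Loops", "blooms": "Apply"}),
]

if __name__ == "__main__":
    chosen = pick_random(LIBRARY, {"category": "Loops", "blooms": "Apply"})
    print(chosen.name)
```

In this sketch, adding more entries with the same tags widens the pool a student's question is drawn from without changing the selection code.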

As computer science assessment tests are added to the assessments library, instructors can synchronize their assignments with a single click. This ensures they are pulling from the full breadth of the assessment library.

Codio's Global Assessment Library

Instead of starting from scratch, instructors can pull from Codio’s existing Global assessment library. All the assessments in Codio’s Global assessment library are auto-graded and each assessment is tagged by:

- Programming language

- Assessment type

- Category (topic-level)

- Content (sub-topic level)

- Learning Objective (in SWBAT form: "Students will be able to...")

- Bloom's Taxonomy level

Bloom's taxonomy is a way to represent the level of mastery of the content being assessed. See the graphic below for a description of each level and a list of common verbs associated with that level.

For example, when assessing a student's knowledge of loops, different online tests reveal different levels of mastery.

| Learning Objective | Bloom's Level |
| --- | --- |
| List the different types of loops in Java | Remember |
| Select the type of loop to use given the described use case | Understand |
| Implement an iterative algorithm to calculate factorials | Apply |
| Design a piece of turtle art using a loop | Create |

Amria and colleagues (2018) described how generating exams with questions standardized to a learning taxonomy such as Bloom’s helps ensure alignment between course learning objectives and assessments.

Create Your Own Assessment Library

Codio also allows instructors to create their own assessment libraries. Assessment libraries are associated with an organization, making it easy for instructors who teach different sections of the same course to collaborate on a shared question pool. Assessment libraries also work well for instructors who teach different variations of the same course (e.g., CS1 for majors and CS1 for non-majors).

Unlike many other tools which allow the creation of question banks, Codio allows you to save the entire page layout and associated files as a single assessment in the library. This gives you greater control over the computer science assessment tests you create.

Within an assessment library, an assessment can have a simple layout, which saves just the contents of the assessment, or a complex layout, which saves the entire page. Saving the entire page makes it easy to store full coding exercises with auto-grading scripts and starter code.

Are Randomized Exams Fair?

One concern about a random set of exam questions is ensuring an equivalent level of difficulty across the generated exams. Fowler and colleagues (2022) studied randomized assessments in CS0 and Data Structures courses and found "the apparent fairness of the generated exam permutations is reasonably pleasing. The worst semester in this respect was Fall 2019, but even this variance in score is less than half a letter grade."
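One simple way to check a question pool for this kind of imbalance is sketched below. It is not the method used by Fowler and colleagues; it is a simplified illustration that assumes every question carries equal weight and that an average past score is known for each variant. The `exam_score_spread` function and the sample numbers are made up for the example. The sketch enumerates every exam that could be generated by drawing one variant from each pool and reports how far the expected scores spread.

```python
from itertools import product
from statistics import mean, pstdev


def exam_score_spread(pools: list[list[float]]) -> tuple[float, float, float, float]:
    """Summarize expected exam scores over all possible generated exams.

    pools: one list per exam slot, holding the average score (0.0 to 1.0)
    observed for each question variant in that slot.
    Returns (lowest, highest, mean, standard deviation) as percentages.
    """
    # Each combination picks one variant per slot; questions are equally weighted.
    totals = [100 * mean(combo) for combo in product(*pools)]
    return min(totals), max(totals), mean(totals), pstdev(totals)


if __name__ == "__main__":
    # Made-up average scores for three slots with two variants each.
    pools = [[0.80, 0.70], [0.60, 0.60], [0.90, 0.50]]
    lowest, highest, average, spread = exam_score_spread(pools)
    print(f"lowest={lowest:.1f} highest={highest:.1f} "
          f"mean={average:.1f} stdev={spread:.1f}")
```

A large gap between the lowest and highest expected score suggests that some variants in a pool are noticeably harder than others and may need rebalancing.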

References

Amria, A., Ewais, A., & Hodrob, R. (2018, January). A Framework for Automatic Exam Generation based on Intended Learning Outcomes. In CSEDU (1) (pp. 474-480).

Fowler, M., Smith IV, D. H., Emeka, C., West, M., & Zilles, C. (2022, February). Are We Fair? Quantifying Score Impacts of Computer Science Exams with Randomized Question Pools. In Proceedings of the 53rd ACM Technical Symposium on Computer Science Education V. 1 (pp. 647-653).