In Mo Gawdat’s best-selling book “Scary Smart”1, the former X (previously known as Google X) Chief Business Officer argues that our future will witness three inevitable AI events: firstly, AI will happen, there’s no stopping it; secondly, AI will be more intelligent than humans; and thirdly, mistakes that bring about hardship will take place. He argues that machines will possess three fiercely debated qualities: consciousness, emotion, and ethics.

While Gawdat’s pronouncements may seem alarmist, it’s clear that AI is advancing at a blistering pace and forcing industry leaders, politicians, and communities worldwide to react, from urgent calls for summits to debate governance to apocalyptic warnings2 from the “godfathers” of AI.

Codio is working hard to help lead the way in harnessing AI in computing education.

AI in Computing Education

Against this backdrop of explosive growth in generative AI, computer science education is under the spotlight. Colleges and universities are reeling as “the Chegg problem” is superseded by “the ChatGPT problem,” with academics debating and researching how CS Ed should evolve. For example, can AI lower barriers to equitable participation in computing education? Will AI tools such as GitHub Copilot and ChatGPT require an overhaul of computing education as we know it?

Former Harvard University Professor of Computer Science Matt Welsh, now founder of fixie.ai, declared “The End of Programming” in his January 2023 Communications of the ACM article3, where he argues that “programming will become obsolete,” that the conventional idea of “writing a program” is “headed for extinction,” and that “for all but very specialized applications, most software, as we know it, will be replaced by AI systems.”

Welsh argues: “In the future, CS students are not going to need to learn such mundane skills as how to add a node to a binary tree or code in C++. That kind of education will be antiquated” and that “the vast majority of Classical CS becomes irrelevant when our focus turns to teaching intelligent machines rather than directly programming them. Programming, in the conventional sense, will, in fact, be dead.”

“CS as a field is in for a pretty major upheaval few of us are really prepared for.” - Welsh

However, we have seen this pattern before with the wave of no-code tools. While Squarespace, Wix, and Bubble have become household names, computing education still has many textbooks and course materials dedicated to developing websites from scratch.

The revolutionary technology did not change what was taught in computing education but, instead, how it was taught. The no-code movement brought about pedagogical tools such as block-based programming and Parsons problems4, both of which we know can lower the barrier to success in computer science education.

Harvard University's flagship CS50 course has taken this pedagogical adoption route - reporting5 an AI makeover with plans to use AI to grade assignments and provide coding instruction and personalized learning tips.

In a similar vein, Andrew Rice, a principal researcher at GitHub (and a professor of computer science at the University of Cambridge), writing about GitHub’s own Copilot, suggests6: “A tool that provides suggestions for coding problems could really lower the barriers to entry for programming and give people a more rewarding experience as they learn.”

Another Ivy League professor we spoke to suggested their computer science department may take a site license to incorporate AI tools into the student experience, acknowledging that students will adopt these tools regardless - also highlighting that CS education will need to evolve the foundations on which students are assessed and even what they are assessed on.

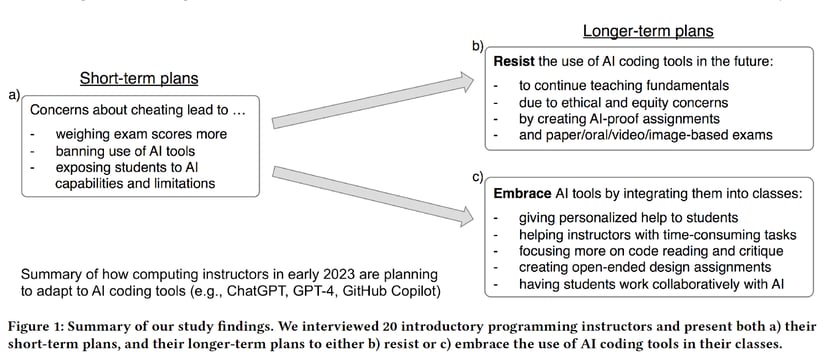

This idea of embracing AI for better educational outcomes is one longer-term approach we hear teachers taking. Sam Lau and Phillip Guo, in a forthcoming ICER paper7, summarize 20 CS1 professor’s thoughts into the following model:

While depicted as an either-or, we see these approaches as two ends of a spectrum, with most professors landing somewhere in the middle. For platforms like Codio, this means building a broad set of AI tools to help teachers balance the institutional concerns about academic integrity with the industry demand for efficient, AI-collaborative developers.

An AI path forward for Codio

Codio aims to eliminate barriers to success for computing learners, envisioning a world where anyone can access engaging computing education.

To make this a reality, we’ve long taken a research-informed approach to building flexible, evidence-based tools and resources for computing and STEM teachers and professionals that enable better learner outcomes. Despite the newness of AI tools, many researchers have already studied their effectiveness as pedagogical enhancements, with some already publishing pilot study results from their classrooms.

Codio’s Vice President of Product and Partnerships, Elise Deitrick, Ph.D., explains that there are several things that AI won’t do in the near future. First, she says, “AI is never going to replace teachers. You have to think about teachers as being these holistic guides who aren’t there just to provide information you can find on Google or in a textbook.

They're there to help you grow as a person and become a participant in a community.”

Additionally, Dr. Deitrick explains AI will always be following. “AI models have to be trained from existing information in order to operate; there always needs to be someone pushing that boundary. There always has to be innovation. And AI is always going to be one step behind.

Even now, when you ask it to give you code, often (but not always), it'll give you functional code. But most people who are working on computing education curricula right now are trying to push learning towards more than just functional code, but good code that is scalable, efficient, accessible, readable, and secure. There are different levels of done.”

Additionally, with the rise of AI-created content, training models has become more challenging. “Anyone who's taught and had to copy handouts knows you have to keep the original because if you copy a copy of a copy, the fidelity gets destroyed.”

Ultimately, Dr. Deitrick concludes, AI will “be great at speeding things up, but I just don't ever see it getting past that point anytime soon.”

Instead of simply creating a plugin for ChatGPT or rushing to integrate it into the Codio platform, as many companies are doing, our approach to AI remains the same as our other product advancements—to stand on the shoulders of giants.

Guiding Principles for Ethical AI Adoption

A recent systematic review8 of LLMs' practical and ethical challenges in Education suggests more attention should be paid to ethical issues. Out of the 118 peer-reviewed studies evaluated, Yan et al. reported that “most of the existing LLMs-based innovations (92%) were only transparent to AI researchers and practitioners, with only nine studies that can be considered transparent to educational technology experts and enthusiasts”.

They attributed this low transparency to “the lack of human-in-the-loop components in prior studies.” This finding strongly resonates with the call to action9 from the Office of Educational Technology (U.S. Dept. of Education), which states that “teachers and other people must be ‘in the loop’ whenever AI is applied to notice patterns and automate educational processes.”

Transparency concerns go beyond LLMs' use cases and implementations, from how AI models (including LLMs) should be designed to the datasets on which these models are trained. Datasets frequently reflect historical patterns, and any AI model built using these datasets can reproduce those patterns10. This exemplifies the need for explainable AI and more visibility in the dataset origin and creation processes.

So as we think about how tools that support computer science students evolve to embrace AI, we believe it’s essential to adhere to some guiding principles, including:

- Ensuring teacher control: AI should help lower barriers to successful outcomes in computing education, but context is everything, and we think teachers should remain in control of how AI is used in their course delivery, especially concerning improving learning experiences for students.

- Keeping humans in the loop: When generative AI is used in educational contexts, it’s essential that its use does not cause students to receive inaccurate information or feedback – which cannot be guaranteed with today’s hallucinating models. Instead, all AI-generated output should be teacher-facing or reviewed by an instructional team member.

- Transparency on dataset and practices: As we research our product development and bring new AI-driven tools into the product experience, we want to be transparent about the origin of datasets used and the approaches we’re taking to use AI in the product. As we build new features, we intend to be mindful of ethical considerations of transparency, privacy, equality, and beneficence.

Codio and Near-Term AI Product Opportunities

While recent computing education studies have illustrated how LLMs can enhance learning experiences, researchers have also highlighted concerns about usefulness, accuracy, datasets, and readiness for “at scale” deployment.

Codio sees significant potential to harness AI to advance computing and tech skills education.

Following our guiding principles, we can envision many opportunities within Codio’s platform to improve learning experiences while avoiding potential pitfalls:

- Error message augmentation

- Syllabus-based AI-assisted course creation

- AI grading assist

- AI assessment checker

- Hint bot

Error Message Augmentation

Since the 1960s, researchers have explored making programming error messages (PEMs) more novice-friendly. Students spend significant time reading and trying to understand these messages, and they play an essential role in students’ learning journeys. However, PEMs can still be notoriously tricky to understand, so making them easier to interpret, at scale and across multiple languages, remains a challenge.

The ACM’s SIGCSE 2023 provided a glimpse into what might be possible. A paper11 by Leinonen, Reeves, Hellas, Denny, Becker, Sarsa, and Prather explored using LLMs to enhance PEMs. The researchers took some Python error messages reported to be the most unreadable, constructed example programs, and then used Codex’s code-davinci-002 model with a variety of prompts to produce enhanced PEMs. They analyzed the outputs against criteria that judged whether the PEMs were comprehensible, contained unnecessary content, contained a correct explanation and fix, and were an overall improvement.

Results varied. The researchers found that the generated explanations were considered an improvement over the original PEM in about half of the cases, rising to 70% with a temperature value of 0. Still, only about half of these explanations were deemed correct. Fixes were generated in 70% of cases, but fewer than half (47%) were correct, highlighting the current limitations of using LLMs at scale and across languages with a high degree of completeness, improvement, and fix accuracy.

Another paper12 explored the use of LLM-generated code explanations in a web software development e-text and found many limitations affecting students’ perceptions of their usefulness. For example, where students rated explanations as low quality, this seemed to relate to explanations being overly detailed, being the wrong type of explanation, or mixing code and explanatory text.

The authors highlighted prompt engineering, measures to improve learner engagement, live code explanations, and browser-based course materials (versus mere e-text) as potential foci for future advances, suggesting a “human-in-the-loop” approach as a viable path forward.

We can build on the promise of the studies above while addressing the lower-than-desired accuracy rate by providing the LLM with a pre-written list of error message augmentations. The LLM would then only need to select which piece of human-authored feedback is appropriate given the context. Additionally, because Codio is a full IDE, we can go beyond simple text output and highlight the lines in the code editor that need fixing, drawing the student more clearly to the bug.
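The selection idea above can be sketched as follows. This is a minimal illustration under stated assumptions, not Codio's implementation: the catalog entries, the `augment` function, and `stub_selector` (a keyword-based stand-in for the LLM call) are all hypothetical. The point it shows is that only human-authored text can ever reach the student, because any ID outside the catalog falls back to no hint at all.

```python
import re

# Hypothetical human-authored catalog; IDs and wording are illustrative only.
AUGMENTATIONS = {
    "name_error": "You used a name Python does not know yet. "
                  "Check the spelling and that it is defined before this line.",
    "indentation": "The indentation of this line does not match the block around it.",
    "missing_colon": "A line that starts a block (if, for, def, while) must end with a colon.",
}


def stub_selector(error_text, choices):
    """Stand-in for an LLM call: returns one ID, which may not be in the catalog."""
    if "NameError" in error_text:
        return "name_error"
    if "IndentationError" in error_text:
        return "indentation"
    return "unknown"


def augment(error_text, selector):
    """Pick a pre-written hint for an error message and find the line to highlight."""
    chosen = selector(error_text, sorted(AUGMENTATIONS))
    line_match = re.search(r"line (\d+)", error_text)
    return {
        # Only catalog text is ever shown; an unrecognized ID yields None.
        "hint": AUGMENTATIONS.get(chosen),
        "highlight_line": int(line_match.group(1)) if line_match else None,
        "original": error_text,
    }


if __name__ == "__main__":
    message = 'File "main.py", line 3, in <module>\nNameError: name \'totl\' is not defined'
    print(augment(message, stub_selector))
```

In a real deployment the selector would be a model call that is asked to return one of the listed IDs; validating its answer against the catalog keeps the design consistent with the human-in-the-loop principle.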

Syllabus-based AI-assisted course creation

Instructors are often time-poor and resource-constrained when producing and keeping current their course texts and assessments, a situation exacerbated by solutions to exercises being frequently published and shared online. Time and resource constraints are among the reasons why we offer course authoring and migration services and why the work of our Success team is so valued, as they effectively become an extension of your department, school, or organization.

As Codio’s resource library expands, and as resources become increasingly “evergreen” through features such as parameterized questions that allow for more individualized assessments, we see potential to auto-generate a complete set of course materials. A syllabus-based course creator would parse an instructor’s course syllabus and then build a course from Codio’s existing resource library.
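As a rough sketch of the syllabus-to-course mapping described above, the example below matches each syllabus line to the library resource with the largest keyword overlap. Everything here is an assumption made for illustration: the `LIBRARY` entries, their tags, and the `build_course` function are hypothetical, and simple keyword overlap stands in for whatever matching technique a real system would use.

```python
import re

# Hypothetical resource library; titles and tags are illustrative, not a real catalog.
LIBRARY = [
    {"title": "Intro to Loops", "tags": {"loops", "iteration", "while", "for"}},
    {"title": "Functions and Scope", "tags": {"functions", "scope", "parameters"}},
    {"title": "Binary Trees", "tags": {"trees", "binary", "recursion"}},
]


def words(text):
    """Lowercased alphabetic words in a piece of text."""
    return set(re.findall(r"[a-z]+", text.lower()))


def build_course(syllabus, library=LIBRARY):
    """Map each non-empty syllabus line to the best-overlapping resource, or None."""
    plan = []
    for line in syllabus.splitlines():
        topic = line.strip()
        if not topic:
            continue
        scored = [(len(words(topic) & res["tags"]), res["title"]) for res in library]
        best_score, best_title = max(scored)
        # Topics with no overlap stay unmatched so an instructor can fill the gap.
        plan.append((topic, best_title if best_score > 0 else None))
    return plan


if __name__ == "__main__":
    syllabus = "Week 1: for and while loops\nWeek 2: Functions, parameters\nWeek 3: Databases"
    for topic, resource in build_course(syllabus):
        print(topic, "->", resource)
```

Leaving unmatched topics as `None` rather than guessing keeps the instructor in control of the final course outline.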

Beyond full-course texts, we also see a role for LLMs to assist with generating programming exercises and code explanations. Recent research by Sarsa, Hellas, Denny, and Leinonen13 explores the extent to which OpenAI’s Codex could produce programming exercises that are sensible, novel, and readily applicable, as well as whether the same model could produce comprehensive and accurate code explanations.

Concerning programming exercises, the authors found that Codex could generate sensible and novel exercises, including sample solutions, but that only around 70% of exercises generated included test suites, and of those, only approximately 30% of the generated solutions passed the tests.

The authors found some encouraging potential with code explanations, but only 67.2% were deemed accurate when looking at line-by-line explanations.

At Codio, we envisage improving on these results, in part by coupling LLM-generated assessments and explanations with human oversight and refinement to produce readily usable resources, consistent with the principles of keeping humans in the loop, giving instructors control, and being transparent on datasets.

AI grading assist

AI-assisted grading is already available in some commercial products. Codio plans to add this capability in a form that curates previous feedback during manual grading.

We envision an ML classifier that clusters student submissions and allows instructors to assign, with one click, feedback given on one student’s submission to another student’s submission where relevant. Instructors and TAs will benefit from a faster manual grading cycle, and in the future, we see the potential to introduce the same functionality to auto-grading course assessments.
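The clustering-and-reuse workflow above can be illustrated with a small sketch. It is an assumption-laden stand-in rather than the planned feature: instead of a trained ML classifier it uses plain text similarity from Python's standard `difflib` module, and the function names, the greedy grouping, and the 0.8 threshold are all hypothetical choices. Suggestions are only proposed; accepting one remains the instructor's click.

```python
from difflib import SequenceMatcher


def similar(a, b, threshold=0.8):
    """True when two submissions are textually close (ratio is between 0 and 1)."""
    return SequenceMatcher(None, a, b).ratio() >= threshold


def cluster(submissions, threshold=0.8):
    """Greedy grouping: a submission joins the first cluster whose first member it resembles."""
    clusters = []  # each cluster is a list of student IDs; the first is the representative
    for student, code in submissions.items():
        for group in clusters:
            if similar(submissions[group[0]], code, threshold):
                group.append(student)
                break
        else:
            clusters.append([student])
    return clusters


def suggest_feedback(graded, submissions, threshold=0.8):
    """Propose already-written feedback for ungraded students in the same cluster."""
    suggestions = {}
    for group in cluster(submissions, threshold):
        comments = [graded[s] for s in group if s in graded]
        if comments:
            for student in group:
                if student not in graded:
                    suggestions[student] = comments[0]
    return suggestions


if __name__ == "__main__":
    subs = {
        "ana": "for i in range(10):\n    print(i)\n",
        "ben": "for j in range(10):\n    print(j)\n",
        "cy": "import math\nprint(math.sqrt(16))\n",
    }
    print(suggest_feedback({"ana": "Loop should start at 1, not 0."}, subs))
```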

AI assessment checker

Codio plans to help instructors AI-proof their assessments. This line of product development builds on our broader product focus of “integrity by design”: loosely put, giving instructors ways to design courses that engage students and keep their learning on track, thereby preventing or reducing motivators to cheat. Examples include random and parameterized assessments, feedback-rich auto-graders, automated “nudge” emails, and tools like code playback and visualization.

An integrated LLM could enable instructors to check whether the model correctly answers an assessment question, helping instructors to AI-proof their assessments. Where the check returns a correct solution, we also see the potential to auto-generate AI-proof alternative questions.
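A minimal sketch of such a check, under stated assumptions: the question format, `passes_tests`, and `model_solves` are hypothetical names, and `model_answer_fn` is a stand-in for a real model call that returns source code as a string. The check simply runs the model's answer against the instructor's test cases. A single failed attempt does not prove a question is AI-proof, so the result is best read as a signal for instructor review.

```python
def passes_tests(solution_source, func_name, cases):
    """Run candidate code and compare its results with (args, expected) pairs.

    exec() is used here only for illustration; untrusted code belongs in a sandbox.
    """
    namespace = {}
    try:
        exec(solution_source, namespace)
        func = namespace[func_name]
        return all(func(*args) == expected for args, expected in cases)
    except Exception:
        # Syntax errors, missing functions, and runtime errors all count as failures.
        return False


def model_solves(question, model_answer_fn):
    """True when the (stand-in) model's answer passes every instructor test case."""
    answer = model_answer_fn(question["prompt"])
    return passes_tests(answer, question["func_name"], question["cases"])


if __name__ == "__main__":
    question = {
        "prompt": "Write add(a, b) that returns the sum of two numbers.",
        "func_name": "add",
        "cases": [((1, 2), 3), ((0, 0), 0)],
    }
    # Hypothetical model stub that always returns a correct solution.
    stub = lambda prompt: "def add(a, b):\n    return a + b\n"
    print("Model solves this question:", model_solves(question, stub))
```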

Hint bot

Our team is also excited about using AI to accompany students on their learning journey—maximizing learning while mitigating risks of inappropriate use.

Here we see the potential for chatbot-like applications that can be deployed to ask students questions at moments where they are stuck, in ways that hint at potential approaches to become unstuck, or in ways that nudge students to recall an appropriate next step—kind of like a nudge to overcome writer’s block.

Conclusion

Expect more information on these developments in the near future. Codio is excited about the potential of AI in computing education to enhance learning experiences, from error message augmentation to AI-assisted grading, and we're dedicated to exploring these possibilities responsibly. As we move forward, we'll continue to prioritize research-informed approaches, ethical AI adoption, and the development of tools that truly serve the needs of educators and learners alike.

References

- Scary Smart, The Future of Artificial Intelligence and the Role You Need to Play, Mo Gawdat, September 2021, ISBN: 9781529077629

- ‘Godfather of AI’ Geoffrey Hinton quits Google and warns over dangers of misinformation, The Guardian, 2nd May 2023, https://www.theguardian.com/technology/2023/may/02/geoffrey-hinton-godfather-of-ai-quits-google-warns-dangers-of-machine-learning

- Matt Welsh. The End of Programming. Communications of the ACM, January 2023, Vol. 66 No. 1, Pages 34-35, 10.1145/3570220. https://cacm.acm.org/magazines/2023/1/267976-the-end-of-programming/fulltext

- Barbara Jane Ericson. 2018. Evaluating the Effectiveness and Efficiency of Parsons Problems and Dynamically Adaptive Parsons Problems as a Type of Low Cognitive Load Practice Problem. Ph.D. Dissertation. Georgia Institute of Technology.

- Livemint, Fareha Naaz, 2nd June 2023, Harvard University’s popular online computer course to use AI for grading of assignments.

- Andrew Rice, Raspberrypi, HelloWorld, April 2023, “Using AI-powered developer tools for teaching programming.” https://helloworld.raspberrypi.org/articles/hw20-using-ai-powered-developer-tools-for-teaching-programming

- Sam Lau and Philip J. Guo. 2023. From "Ban It Till We Understand It" to "Resistance is Futile": How University Programming Instructors Plan to Adapt as More Students Use AI Code Generation and Explanation Tools such as ChatGPT and GitHub Copilot. In Proceedings of the 2023 ACM Conference on International Computing Education Research V.1 (ICER ’23 V1), August 7–11, 2023, Chicago, IL, USA. ACM, New York, NY, USA, 16 pages. https://doi.org/10.1145/3568813.3600138

- Yan et al., March 2023: Practical and Ethical Challenges of LLMs in Education: A systematic literature review.

- https://tech.ed.gov/ai/

- Ben Hutchinson, Andrew Smart, Alex Hanna, Emily Denton, Christina Greer, Oddur Kjartansson, Parker Barnes, Margaret Mitchell. 2021. Towards Accountability for Machine Learning Datasets: Practices from Software Engineering and Infrastructure. In Conference on Fairness, Accountability, and Transparency (FAccT ’21), March 3–10, 2021, Virtual Event, Canada. ACM, New York, NY, USA, 16 pages. https://doi.org/10.1145/3442188.3445918

- Juho Leinonen, Arto Hellas, Sami Sarsa, Brent Reeves, Paul Denny, James Prather, and Brett A. Becker. 2023. Using LLMs to Enhance Programming Error Messages. In Proceedings of the 54th ACM Technical Symposium on Computer Science Education V.1 (SIGCSE 2023), March 15–18, 2023, Toronto, ON, Canada. ACM, New York, NY, USA, 7 pages. https://doi.org/10.1145/3545945.3569770

- Stephen MacNeil, Andrew Tran, Arto Hellas, Joanne Kim, Sami Sarsa, Paul Denny, Seth Bernstein, and Juho Leinonen. 2023. Experiences from Using Code Explanations Generated by Large Language Models in a Web Software Development E-Book. In Proceedings of the 54th ACM Technical Symposium on Computer Science Education V.1 (SIGCSE 2023), March 15–18, 2023, Toronto, ON, Canada. ACM, New York, NY, USA, 7 pages. https://doi.org/10.1145/3545945.3569785

- Sami Sarsa, Paul Denny, Arto Hellas, and Juho Leinonen. 2022. Automatic Generation of Programming Exercises and Code Explanations Using Large Language Models. In Proceedings of the 2022 ACM Conference on International Computing Education Research V.1 (ICER 2022), August 7–11, 2022, Lugano and Virtual Event, Switzerland. ACM, New York, NY, USA, 17 pages. https://doi.org/10.1145/3501385.3543957